How do medical institutions ensure the ethical use of artificial intelligence?

Fact checked by Derick Wilder

Have you ever considered all the ways we use artificial intelligence (AI) that no one really thinks about anymore? (Here’s to you, Siri!) Once something becomes rote, it tends to fade from our consciousness. This is especially common with AI in the medical field.

AI tools generate content, sort information, and perform tasks.

Machines that alert doctors when a patient’s oxygen level gets too low? AI.

Automated EKG readings? Also AI.

Tracking these data points, among many others, has become routine in the healthcare world.

“Soon after people become familiar with a new AI technology, it seems as if many often forget that it’s AI,” says Jonathan Handler, MD, senior innovation fellow at OSF HealthCare.

Yet AI is used in myriad medical settings, from individualizing treatments in the clinic to discovering patterns in the lab.

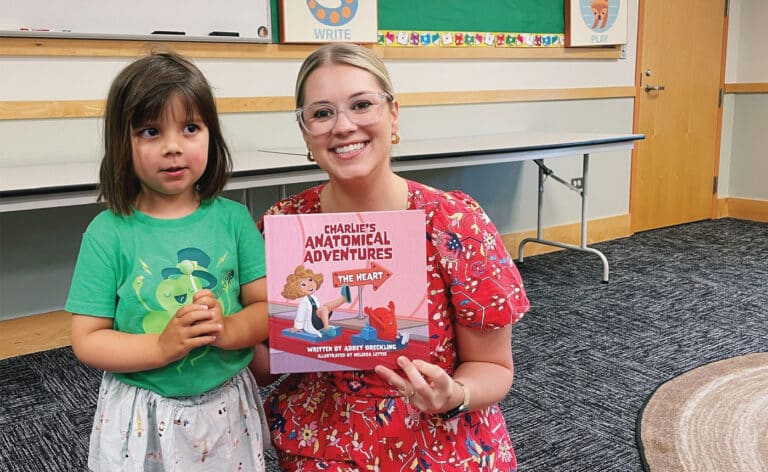

The team at OSF is also exploring and implementing newer technologies powered by AI. For example, one AI pilot program listens to the conversation between doctor and patient, then automatically writes the first draft of a clinical note. OSF doctors use another AI platform to help identify diabetic retinopathy (damage to the retina caused by diabetes). And yet another pilot program uses AI to better detect precancerous polyps during colonoscopies.

Sachin Shah, MD, University of Chicago Medicine chief medical information officer, notes several ways AI finds its way into current medical practices:

• Dramatically reduces documentation burdens for clinicians

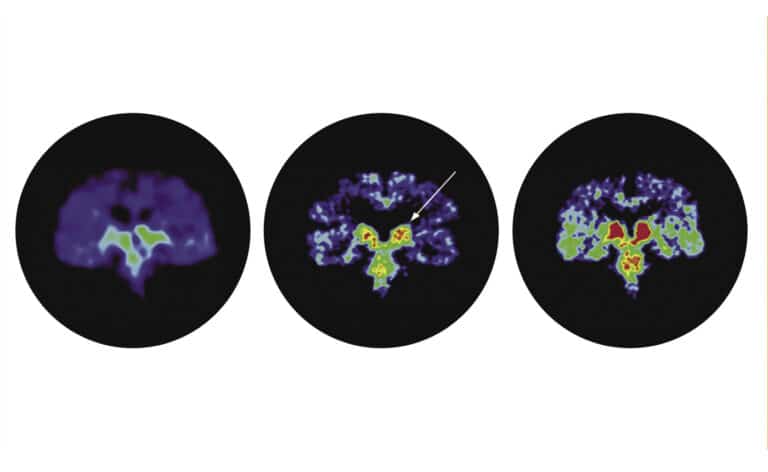

• Searches for anomalies in medical images to improve diagnostics

• Predicts patient outcomes for readmission risk and disease progression

• Analyzes large datasets to look for medical patterns

• Determines patient populations

• Customizes treatment plans

• Speeds up clinical trial participant recruitment

Ultimately, Shah says, “this approach aims to reduce the administrative burden on healthcare providers, allowing them to focus more on patient care.” It also enables “more proactive clinical interventions.”

Doctors and hospitals are already seeing the benefits of AI usage for diagnoses like cancer. Maryellen Giger, PhD, a professor of radiology at the University of Chicago, developed a technology in the early 2010s that hospitals and imaging centers throughout the country use to detect cancerous lesions on breast MRIs.

In the UK, Oxford Drug Design, a startup focusing on new drug discoveries, uses AI at the molecular level to study and manufacture new medications.

Alan D. Roth, PhD, the company’s CEO, is an organic chemist. He says the company uses a computational AI program called Synth AI to turn current chemistry knowledge into new molecules that can fight disease. Currently, the team is focusing on cancer and lethal, drug-resistant infections.

“Our computational AI results in molecules that can be made with actual current chemistry, and that can be made in an economically efficient manner, so that if they become drugs, pharmaceutical companies can afford to manufacture them,” Roth says.

Oxford Drug Design already works with the University of Cambridge and the University of Liverpool, as well as the National Institutes of Health and the National Cancer Institute in the U.S.


They’ve also recently entered into a partnership with the Cancer Research UK Scotland Institute to work on therapeutics for cancer treatment — so the company’s approach to AI usage is pretty clear. But for medical teams and institutions without such clear-cut boundaries, the decision to use AI comes with a lot of discussion.

Overseeing AI usage

“It has to start with strategic priorities and clinical need,” Shah says. “Where are the specific areas where AI can help meet a need, build a capability, and significantly improve patient outcomes or operational efficiency?”

University of Chicago Medicine, which has a dedicated bioethics committee, has its own process for answering these questions. First, the team analyzes the need for AI at the health system level, determining what the needs are and how to prioritize them. Next, clinical and operational leaders assess how AI might affect workflows and patient outcomes. If everything looks good at that point, the AI system undergoes pilot testing in a controlled environment to see how well it works, what impacts it might have, and how it integrates with existing technology. Finally, the system is monitored continually to ensure it stays effective and relevant. The cost of running the AI program factors into the decision as well.

“It’s essential to involve key stakeholders from the earliest design and assessment phase, including clinicians, patients, operational leaders, and IT teams,” Shah says. “A detailed cost-benefit analysis evaluating the financial implications and resource requirements associated with AI adoption is essential, and part of the process required to secure funding.”

Handler agrees that many institutions are putting processes in place to create policies, educate staff, and encourage appropriate AI usage. To that end, OSF last year formed a Generative AI committee to facilitate the safe, fair, and effective use of AI.

“Much of healthcare is a high-intensity, high-risk, critical service. Since medical teams often feel severely time pressured, AI deployments that teams perceive will save them time so they can better perform their most critical tasks may be more likely to find rapid uptake,” Handler says.

Healthcare facilities also need to be transparent with patients and clinicians. A single screw-up from an AI system can erode trust in an entire medical facility.


“This means engaging multidisciplinary teams, including clinicians, data scientists, ethicists, and other key stakeholders from the design and governance stage through implementation to evaluate and monitor AI applications,” Shah says.

More and more major healthcare institutions appear to be developing formal oversight committees like those at OSF and University of Chicago Medicine. But Handler and Shah agree that it’s not industry standard yet — though if the risks of AI increase, so will the need for checks and balances.

“As we go forward, will we see this become a standard?” Handler asks. “It’s hard to say, but I suspect that, like so many other technologies in healthcare, usage with greater risk will have greater oversight.”

Of course, creating an oversight board isn’t easy. Shah says subcommittees are integral to monitoring the development, validation, and security of AI models; compliance; risk mitigation; financial requirements; and resource allocation. The committee also needs to be cross-departmental.

“Interdisciplinary collaboration across various departments and stakeholders — including clinical, operational, legal, regulatory compliance, privacy, and IT — are critical to maintaining an aligned, holistic oversight approach,” Shah says.

In addition to all that, safeguards need to be put in place. Shah says that University of Chicago Medicine already employs quite a few, including robust measures to protect the privacy and security of patient data, mitigation of AI bias to ensure equity in care, rigorous pre-testing of any AI system, and overall regulatory compliance.

The team also works to ensure AI systems can be explained clearly and easily to patients and clinicians — something that Handler says might help facilitate AI’s success in healthcare.

“To ensure safety, people often want to understand how the AI works and to have a comprehensible explanation for any guidance it provides,” he says. “This can prove challenging when the AI uses very complex processing and many data elements to generate its output.”

In the early days

Handler says that work to address that challenge is already in progress. But where do we go from here? What will the future of AI look like in healthcare? If you ask Roth, this is only the beginning.

“Soon, what takes us a prolonged amount of time today is going to be reduced by the computational power, which is constantly improving,” Roth says. “Patients and physicians will continue to benefit.”


In his line of work specifically, Roth believes AI efficiencies will significantly cut down on development timelines, potentially shaving off years of testing prior to deployment of a new drug.

Shah expects some efficiencies and improvements to come in the form of faster, more accurate diagnoses, especially in oncology and genomics. He also thinks we’ll see better predictive analytics for disease onset and progression, leading to earlier diagnoses and treatments or more personalized preventive care protocols.

For patients in particular, Shah anticipates they’ll eventually feel more empowered and efficient when working with the healthcare system, with more self-efficacy and knowledge to manage more of their own care. And because AI can streamline all sorts of administrative and operational tasks, patients may end up paying less for healthcare and having better access to physicians.

But regardless of how it moves forward, AI will surely continue to shake things up. “I’d still wager that AI will be far more pervasive and disruptive in the coming years than it has ever been,” Shah says.

And there’s plenty more to figure out. “Ultimately, though, I’ll bet — or at least hope — that AI will help improve the quality, accessibility, equity, and experience of care for all,” Handler says.

And just as has already happened with AI-backed tools now in use, he predicts many of us will forget the dramatic changes AI will spur in our everyday lives.

Originally published in the Fall 2024/Winter 2025 print issue.

Jennifer is an award-winning writer and bestselling author. She is currently dreaming of an around-the-world trip with her Boston terrier.